Apache restart with Vim autocmd

In order to execute a command right after saving a file in Vim, you can use the :autocmd command. Here is a quick example of how it can be useful with Apache files. NB: on my system (Debian in this case), an Apache file is automatically recognized as filetype=apache.

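augroup apache
" Clear the group first so that re-sourcing ~/.vimrc does not add the command twice
autocmd!
" Restart Apache after saving a file under sites-enabled (Vim must run with enough privileges)
autocmd BufWritePost */sites-enabled/* !/etc/init.d/apache2 restart
augroup END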
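A variation worth considering, sketched here under assumptions: instead of restarting blindly, check the configuration first and reload only if it parses, so a typo in a vhost file does not take the server down. The function name apache_safe_reload is hypothetical; apache2ctl configtest and apache2ctl graceful are standard apachectl subcommands on Debian, and both need root.

```shell
# Hypothetical helper: reload Apache only when its configuration parses.
# $1 lets you point it at another apachectl-like command (defaults to Debian's apache2ctl).
apache_safe_reload() {
    ctl=${1:-apache2ctl}
    # configtest exits non-zero on a syntax error, so a broken file never reaches the reload
    if "$ctl" configtest; then
        # graceful reloads the configuration without aborting open connections
        "$ctl" graceful
    else
        echo "Apache configuration test failed, not reloading" >&2
        return 1
    fi
}
```

From Vim, the same idea fits directly into the autocmd by replacing the restart command with !apache2ctl configtest && apache2ctl graceful (again assuming Vim runs with the needed privileges).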


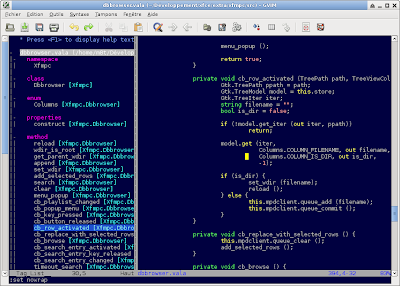

Vim and Vala

I once wrote a quick note about Vala and Vim (or Vim and Vala) and the use of the Tag List plugin. Here is a clean post about these two beasts. Vim (probably the best editor out there; whenever I try out other editors, I always end up back with Vim) has great plugins. However, support for the Vala language is lacking, so here are two basic add-ins for the Vim editor.

Vala syntax

First, there is no syntax coloring for this language. A quick fix is to use the C# syntax with the command :set filetype=cs. That works but is not ideal; the ideal is to install a Vala syntax file, and there is one available on this GNOME Live! page. First download the file from this page and save it under ~/.vim/syntax/ (it has to be named vala.vim so that Vim loads it for the vala filetype). Next add the following lines to your ~/.vimrc file:

" Filetypes

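augroup filetypedetect
au! BufRead,BufNewFile *.vala,*.vapi setfiletype vala
augroup END

augroup vala
" Clear the group first so that re-sourcing ~/.vimrc does not duplicate the command
autocmd!
" 100-column text width and an errorformat matching valac messages (file:line.col-...: error: ...)
autocmd BufRead *.vala,*.vapi setlocal tw=100 efm=%f:%l.%c-%[%^:]%#:\ %t%[%^:]%#:\ %m
augroup END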
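The errorformat above is what Vim's quickfix list (for instance after :make) uses to turn valac output into jumpable errors: for a message of the form file:line.column-endline.endcolumn: error: text, %f takes the file, %l the line, %c the column, %t the first letter of the type (e for error, w for warning) and %m the message. The hypothetical sed function below only mimics that pattern so you can see what gets extracted; it is an approximation, not how Vim parses the output internally, and the sample messages are made up.

```shell
# Hypothetical helper mirroring the Vala errorformat:
#   %f:%l.%c-%[%^:]%#:\ %t%[%^:]%#:\ %m
# Reads valac-style output on stdin and prints the fields Vim would pick up.
# Lines that do not match are dropped, as non-matching lines are for the quickfix list.
parse_valac() {
    sed -n 's/^\([^:]*\):\([0-9]*\)\.\([0-9]*\)-[^:]*: \([a-z]\)[^:]*: \(.*\)$/file=\1 line=\2 col=\3 type=\4 msg=\5/p'
}
```

For example, echo 'hello.vala:3.5-3.9: error: example message' | parse_valac prints file=hello.vala line=3 col=5 type=e msg=example message.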

Tag List

Tag List is a powerful plugin that lets you explore the classes or functions of a source file; this kind of tool is also called a source code browser. The installation steps are simple and are given below, and again, getting it to work with Vala takes a small hack inside the ~/.vimrc file. First download the latest version of taglist from this page. Then uncompress the archive with, for example, the command line:

unzip taglist_45.zip -d $HOME/.vim/

Then go inside ~/.vim/doc, run Vim, and inside Vim execute the command :helptags . to build the help index:

cd ~/.vim/doc
vim
:helptags .

Finally add the following lines inside ~/.vimrc (the leading backslashes are Vim's line-continuation marks, so the three lines form a single let command):

" Work-around Tag List for Vala
let tlist_vala_settings='c#;d:macro;t:typedef;n:namespace;c:class;'.
\ 'E:event;g:enum;s:struct;i:interface;'.
\ 'p:properties;m:method'

Now Vim is ready for Vala, and it's possible to browse source code by typing the command :TlistToggle.
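The value of tlist_vala_settings follows Tag List's language;kind:name;... format: the part before the first semicolon is the language handed to ctags (its C# parser stands in for Vala here), and each following kind:name pair is a ctags tag kind and the label Tag List shows for it. The shell function below is a hypothetical helper that just splits such a string so the structure is easier to read; with Exuberant Ctags, ctags --list-kinds=C# lists the kind letters the C# parser actually knows.

```shell
# Hypothetical helper: split a Tag List settings string of the form
#   language;kind:name;kind:name;...
# and print the ctags language followed by each kind letter and its label.
show_tlist_settings() {
    settings=$1
    echo "language: ${settings%%;*}"        # text before the first ';'
    # every field after the first ';' is a kind:name pair
    printf '%s\n' "${settings#*;}" | tr ';' '\n' | while IFS=: read -r kind name; do
        if [ -n "$kind" ]; then
            printf '  %s -> %s\n' "$kind" "$name"
        fi
    done
}
```

Fed the full string from the ~/.vimrc lines above, it prints language: c# followed by one line per kind, from d -> macro to m -> method.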

Screenshot: Vim Vala Tag List

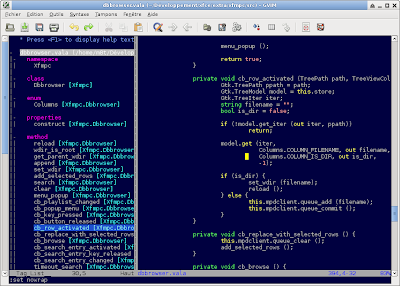


.screenrc

So I pimped up my .screenrc, and since it had been a long time since I last cared about my hardstatus line, I am keeping the content here in case I need it again in a few years:

defscrollback 2048
startup_message off
caption always "%{= Wk}%-w%{= KW}%f%n %t%{-}%+w"
hardstatus off
hardstatus alwayslastline
hardstatus string "%{= ky}[ %H %l ]%=%{= kg}%{+b}[ %n %t ]%-=%{= ky}[ %D %d.%m.%Y %0c ]"
screen -t irssi 0
screen -t mutt 1
screen -t bubbie 2
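In the hardstatus string, %H is the host name, %l the system load, %n and %t the window number and title, and %D %d.%m.%Y %0c the weekday, the date and the 24-hour time (the 0 pads the hour with a zero); the %{...} sequences only set colors. As a rough preview outside screen, here is a hypothetical shell function that prints the host, load and date parts with standard tools. Reading the load from /proc/loadavg assumes Linux, and the window part is left out because it only exists inside screen.

```shell
# Hypothetical preview of the hardstatus text outside screen.
# Optional arguments override host, load and timestamp (handy for testing).
hardstatus_preview() {
    host=${1:-$(uname -n)}                                      # like %H
    load=${2:-$(cut -d' ' -f1-3 /proc/loadavg 2>/dev/null)}     # like %l (Linux only)
    stamp=${3:-$(date '+%a %d.%m.%Y %H:%M')}                    # like %D %d.%m.%Y %0c
    printf '[ %s %s ] [ %s ]\n' "$host" "$load" "$stamp"
}
```

Called without arguments, it prints something along the lines of the left and right blocks of the status line.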


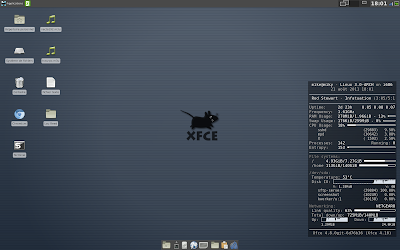

Xfce 4.8 with Conky

I have been following a short discussion on the IRC channel #xfce about an issue with Conky and transparency. I hadn't used Conky for a very long time, but since I knew it was possible to have Conky running perfectly, I gave it another shot, and since I had just done a fresh reinitialization of Xfce on my workstation, I tweaked the configuration file to my needs. Now I have it running in the background and I'll most probably keep it. The configuration I found for a well-working Conky window with transparency is below. Of course I could tell you which combinations don't work, and why, but since there are so many of them I simply give a working one.

own_window yes # create a separate XWindow over the one from Xfdesktop

own_window_type desktop # the window cannot be moved or resized

own_window_hints undecorated,below,sticky,skip_taskbar,skip_pager # make it behave like it belongs to the desktop

own_window_argb_visual yes # true transparency, a compositor has to be active

own_window_argb_value 100 # make the background semi-transparent

double_buffer yes # avoid flickering
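own_window_argb_value takes an alpha value from 0 (fully transparent) to 255 (fully opaque), so the 100 above means roughly 39% opacity for the background. Two small hypothetical shell helpers for converting between the value and a percentage, using integer arithmetic:

```shell
# Hypothetical helpers for Conky's own_window_argb_value (0 = transparent, 255 = opaque).
# argb_to_percent: approximate opacity in percent, rounded down.
argb_to_percent() {
    echo $(( $1 * 100 / 255 ))
}

# percent_to_argb: nearest argb value for a desired opacity percentage.
percent_to_argb() {
    echo $(( ($1 * 255 + 50) / 100 ))
}
```

For instance, argb_to_percent 100 prints 39, and percent_to_argb 40 prints 102.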

Here is a screenshot of the desktop with Conky in the bottom right corner; I made sure there is some I/O activity going on :-)

Screenshot: Xfce with Conky

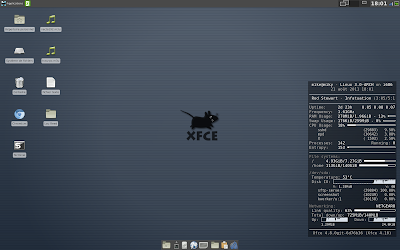


Analysing a phishing email

I was hit by several phishing emails within a short time last week. Having some time this morning, I took the initiative to look at the headers of one of the emails and at the phishing location.

The hosting server

The domain's DNS zone is hosted on a particular network, just like its website. They are actually two different servers, but behind them there seems to be a commercial web hosting service where you can get your domain registered, although it is not a registrar. All of this is hosted in Texas.

The email server

The email originates from Italy. The From address is set up to show a legitimate-looking name (the part where you usually put your name) with a nonexistent email address behind it. The domain name of that address does exist, however, and is hosted in France, but it has no relation to the scam; at least, the owner of this domain can't do anything about it.

The Return-Path shows a real email address with a different domain name, but on the same network anyway. That domain name only serves a dummy "Under construction" webpage.
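Looking at the headers can be done by hand, but a small filter helps. The hypothetical shell functions below read a raw message (for instance saved from the mail client's "view source") on stdin, unfold continued header lines, keep From, Return-Path and Received, and list the bracketed IPv4 addresses found in the Received headers. Each hop adds its Received header on top, so the last address listed is usually the one closest to the sender, keeping in mind that headers below your own provider's servers can be forged.

```shell
# Hypothetical helpers for a raw message read on stdin.
# show_headers: unfold folded header lines, keep From, Return-Path and Received.
show_headers() {
    awk '
        { sub(/\r$/, "") }                          # tolerate CRLF line endings
        /^$/ { exit }                               # headers end at the first empty line
        /^[ \t]/ { sub(/^[ \t]+/, ""); line = line " " $0; next }   # folded line
        { if (line != "") print line; line = $0 }
        END { if (line != "") print line }
    ' | grep -iE '^(from|return-path|received):'
}

# received_ips: bracketed IPv4 addresses from Received headers, newest hop first.
received_ips() {
    show_headers | grep -i '^received:' \
        | grep -oE '\[[0-9]+(\.[0-9]+){3}\]' \
        | sed 's/[][]//g'
}
```

Usage is simply received_ips < message.eml, where message.eml is whatever file the raw message was saved to.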

Who to contact?

All of them if you care; otherwise just let it go, because phishing pages go up and down daily...

To contact the web hosting provider or the email server's operator, request the Whois information for the IP address and contact its abuse department; the Whois record of an IP address normally includes an abuse contact. You can also contact the domain name holder and/or check whether the registrar or web hosting service has a dedicated abuse page on its website.

Of course, some abuse addresses simply forward everything to the trash can. To have any chance of stopping the scam, it is good to contact as many services as possible.
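For the Whois step, the abuse contacts can be pulled out of the whois output with a small filter. abuse_contacts is a hypothetical helper: since the exact field names differ between registries, it simply keeps the lines mentioning "abuse" and extracts the email addresses found on them.

```shell
# Hypothetical filter: read whois output on stdin and print the unique email
# addresses found on lines that mention "abuse" (case-insensitive).
abuse_contacts() {
    grep -i 'abuse' \
        | grep -oE '[[:alnum:]._%+-]+@[[:alnum:].-]+\.[[:alpha:]]+' \
        | sort -u
}
```

It is meant to sit behind the whois client, e.g. whois 192.0.2.10 | abuse_contacts (192.0.2.10 is a documentation address; substitute the IP taken from the Received headers).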
